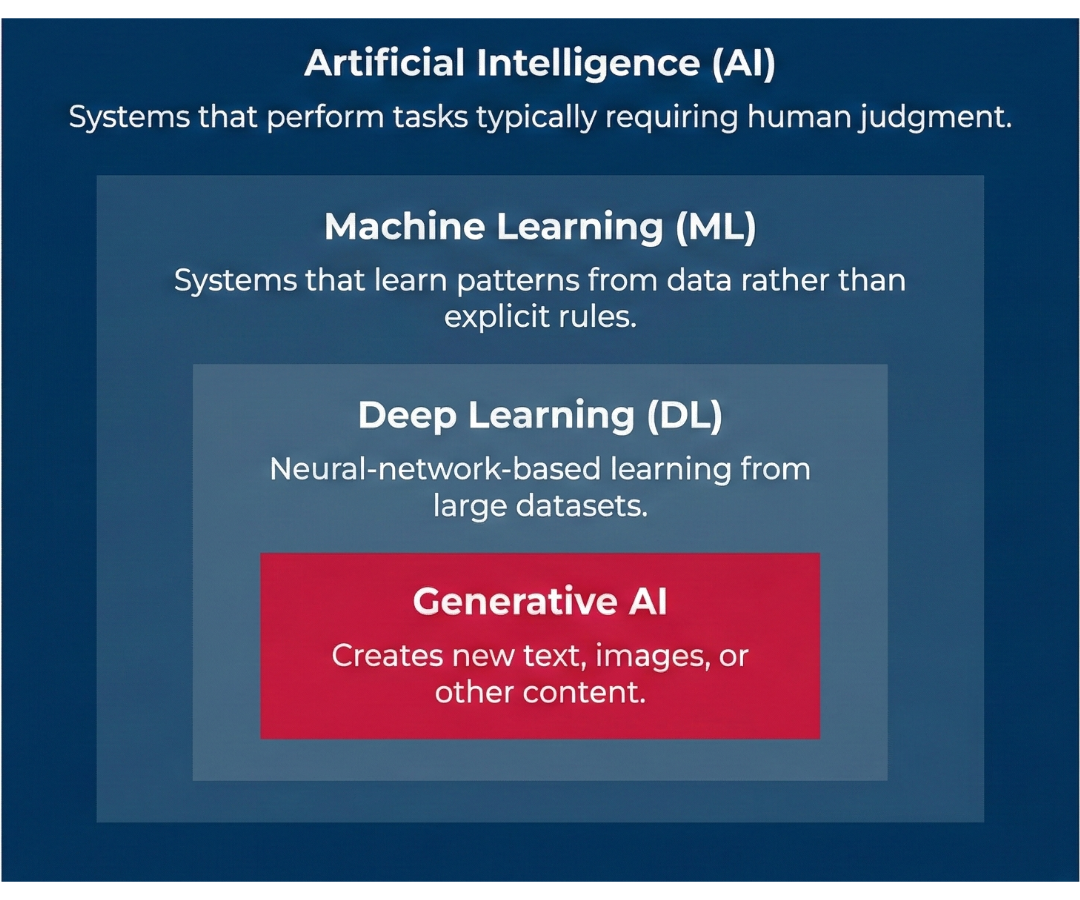

Artificial intelligence (AI) is a broad term for computer systems that can recognize patterns, make predictions, or generate content. This page gives MSU Denver faculty, staff, and students a shared vocabulary for AI and a plain-language explanation of what makes generative AI different and how it produces outputs.

If you want practical guidance on classwork, assignments, and responsible use, visit the Teaching & Learning with AI and Student Resources pages.

The diagram below provides a simplified view of how artificial intelligence, machine learning, deep learning, and generative AI relate to one another.

Generative AI differs from many earlier AI tools in three practical ways:

Important note: Generative AI can produce outputs that are fluent and persuasive while still being incorrect. Treat it as a draft-and-assist tool, not an authority.

A generative AI language model is first trained on large amounts of text to learn patterns in language, then tuned to better follow instructions (prompts) and to apply safety constraints.

When you enter a prompt, the system converts text into smaller units (often called tokens) and uses your prompt as the context for generating a response.
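As a rough illustration of the token step, the Python sketch below splits a prompt into word and punctuation pieces and maps each piece to an integer ID. It is a simplification under stated assumptions: the functions `toy_tokenize` and `to_ids` are hypothetical, and production tokenizers typically use learned subword vocabularies (byte-pair encoding is a common example), so a single word may become several tokens.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation pieces.

    Assumption for illustration only: real LLM tokenizers use learned
    subword vocabularies, not a simple regular-expression split.
    """
    return re.findall(r"\w+|[^\w\s]", text)


def to_ids(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map each token to an integer ID, adding unseen tokens to the vocabulary."""
    ids = []
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)  # next free ID
        ids.append(vocab[tok])
    return ids


if __name__ == "__main__":
    vocab: dict[str, int] = {}
    tokens = toy_tokenize("Summarize this article, please.")
    print(tokens)                 # ['Summarize', 'this', 'article', ',', 'please', '.']
    print(to_ids(tokens, vocab))  # [0, 1, 2, 3, 4, 5]
```

The model itself works with the integer IDs, not the raw characters, which is why the prompt is converted before any response is generated.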

The model estimates how likely each possible next token is, given the context so far. It adds a likely token to the response and repeats the process, building the answer one token at a time.
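The predict-append-repeat loop can be sketched with a deliberately tiny model. The Python example below is an assumption-laden toy, not how production systems are built: it "trains" by counting which word follows which in a hypothetical three-sentence corpus (a bigram model), turns those counts into next-token probabilities, and then greedily appends the most probable token until it reaches a period. The names `train_bigrams`, `next_token_probs`, and `generate` are illustrative only.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (an assumption for illustration); real models
# learn from vastly more text and from subword tokens, not whole words.
CORPUS = [
    "the library opens at nine .",
    "the library opens at nine .",
    "the library closes at six .",
]

START = "<s>"  # marks the beginning of a sentence


def train_bigrams(sentences):
    """Count how often each token follows each other token (toy 'training')."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = [START] + sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Convert follow-counts for `prev` into next-token probabilities."""
    follow = counts.get(prev, Counter())
    total = sum(follow.values())
    return {tok: n / total for tok, n in follow.items()}


def generate(counts, prompt, max_new_tokens=10):
    """Greedy loop: predict, append the most probable token, repeat."""
    tokens = [START] + prompt.split()
    for _ in range(max_new_tokens):
        probs = next_token_probs(counts, tokens[-1])
        if not probs:  # nothing ever followed this token in training
            break
        best = max(probs, key=probs.get)
        tokens.append(best)
        if best == ".":  # stop at the end of a sentence
            break
    return " ".join(tokens[1:])  # drop the start marker


if __name__ == "__main__":
    counts = train_bigrams(CORPUS)
    print(next_token_probs(counts, "library"))  # opens: about 0.67, closes: about 0.33
    print(generate(counts, "the library"))      # the library opens at nine .
```

Because "opens at nine" is the most frequent pattern in the toy corpus, the loop produces it even if the real hours have changed; nothing in the loop checks the claim against an actual schedule. Real systems often sample from the probabilities rather than always taking the top token, which is one reason the same prompt can yield different responses.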

Because the system is generating likely language (not checking an external truth source by default), verification remains the user’s responsibility.

Generative AI can be useful as a support tool for early-stage thinking and revision. It works best when the goal is to explore ideas, improve clarity, or create examples, rather than to produce final or authoritative work.

Generative AI is often helpful for:

Brainstorming, outlining, and early drafting: Generating starting points, organizing ideas, or suggesting possible directions when facing a blank page.

Revising tone, structure, and clarity: Rewriting text in plain language, adjusting tone for different audiences, or creating concise summaries from longer material.

Generating examples or practice materials: Producing sample explanations, illustrative examples, practice questions, or other low-stakes learning supports.

Summarizing provided material: Identifying key points, themes, or gaps when source text is supplied, especially when asked to note what may be missing or unclear.

Generative AI is not appropriate for tasks that require high levels of accuracy, authority, or human judgment. In these situations, reliance on AI outputs can introduce risk or error.

Generative AI does not work well when:

Factual accuracy is essential: AI may produce confident-sounding responses that are incorrect, incomplete, or fabricated. Facts, statistics, and references must be verified using trusted sources.

Authoritative or final decisions are required: Generative AI should not be the sole basis for grading, employment actions, or legal, medical, or financial decisions.

Sensitive or protected information is involved: Do not enter student records, employee data, health information, or other confidential data, including Personally Identifiable Information (PII), into AI tools unless explicitly approved.

Original scholarly or creative attribution is required: Generative AI cannot reliably distinguish original ideas from learned patterns and may unintentionally reproduce existing work. Use caution.

Context, judgment, or lived experience is central: Generative AI lacks human understanding of intent, values, and situational nuance; tasks that call for ethical or disciplinary judgment need human oversight.

Citations or sources must be reliable: Generative AI may invent or misattribute sources. Any citations it provides must be independently verified.

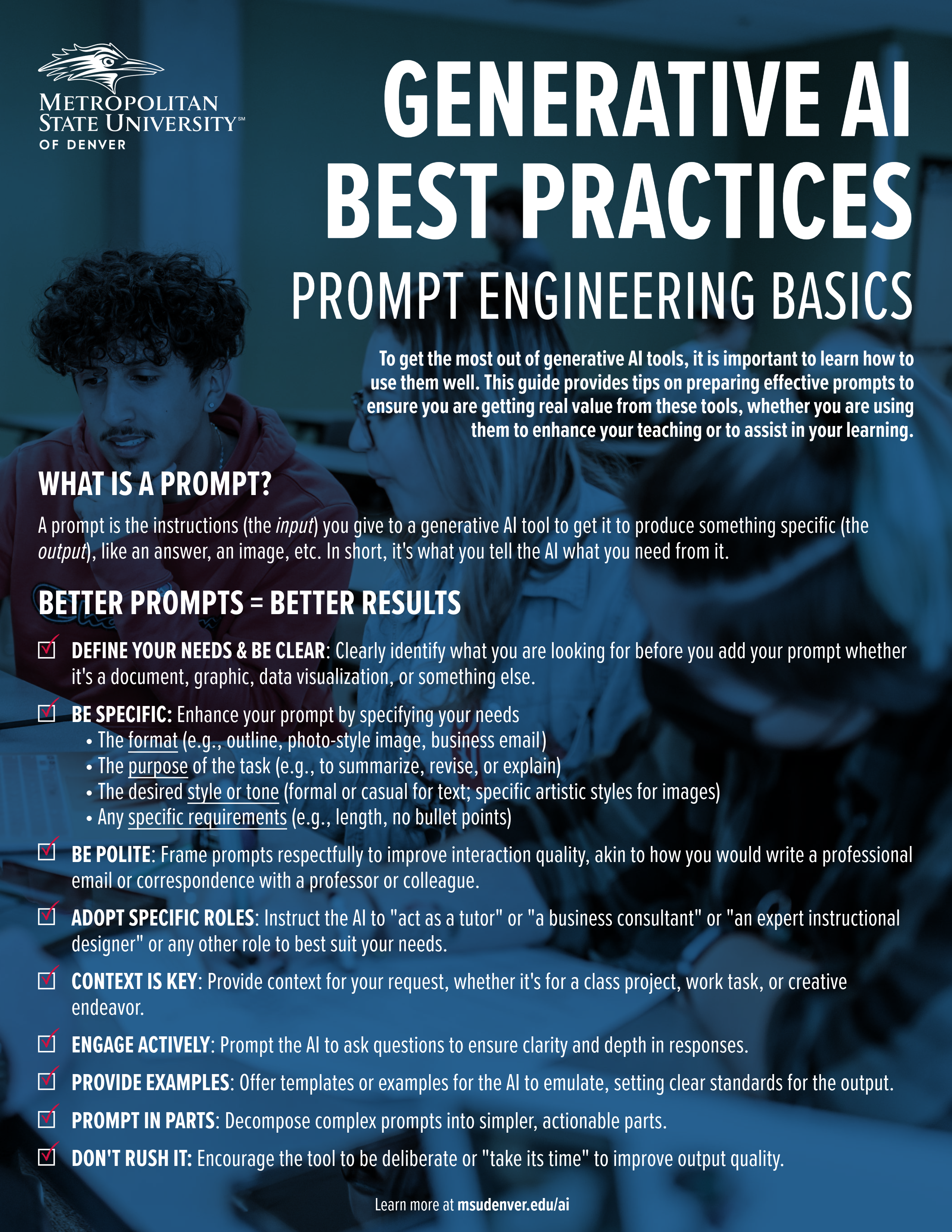

Want more? Check out the Prompt Engineering Guide, a resource for beginners through advanced users. It starts with the basics and builds toward more advanced prompting techniques.

An A-to-Z Guide for Creating and Using Prompts

The videos below offer approachable explanations of how AI and generative AI work, drawn from trusted organizations and academic sources.

This video provides a clear, non-technical overview of how artificial intelligence, machine learning, deep learning, and generative AI relate to one another. It is especially useful for building a shared vocabulary and understanding how generative AI fits within the broader AI landscape.

This video explains what generative AI models are and how they differ from earlier AI systems that primarily classify or predict. It focuses on how these models create new content, such as text or images, by learning patterns from large datasets.

This video offers an accessible explanation of how chatbots and large language models generate responses using patterns in language. It is well suited for learners who want a conceptual understanding of how tools like ChatGPT work without requiring technical background.

This explainer introduces the concept of foundation models and why they matter in the current AI landscape. It provides a higher-level academic perspective on how large, general-purpose models underpin many modern AI applications, including generative AI.

This video explains why large language models can produce incorrect or fabricated information despite sounding confident. It is particularly useful for understanding the limitations of generative AI and why verification and human judgment remain essential.